Impact

- 7 articles with modernization recommendations published on the U.S. Department of Labor’s reference site

- 16 targeted recommendations from Nava on how states can improve their initial UI applications and weekly certifications

Summary

Unemployment insurance (UI) is a crucial program that partially replaces income for workers during times of financial crisis. But the unprecedented surge in unemployment claims during the pandemic exposed weaknesses in our country’s UI technology. As a result, the U.S. Department of Labor (the Department) and state UI agencies received a historic $1 billion in American Rescue Plan Act (ARPA) funding to help states modernize their UI programs. This was the first time the Department received significant resources to collaborate with states on technology modernization projects. As part of this work, the Department partnered with Nava to lead qualitative research with claimants, state workforce agencies, UI experts, and community-based claimant advocates, which resulted in targeted recommendations for how states can modernize their UI programs.

To learn how we partnered with the Department to publish a report on the ways states are using ARPA funds, read this case study.

Approach

Because every state’s UI program is different, the Department knew that one-size-fits-all recommendations wouldn’t be helpful. Instead, the Department focused on providing standards for successful modernization, along with resources to help states meet those standards.

Our work aimed to provide recommendations that would improve customer experiences for UI claimants, which can promote trust in government and increase access to benefits. To achieve this, we helped the Department conduct extensive user research to identify ways to improve the initial UI application and weekly certification. We then turned our recommendations into prototypes and tested them with UI claimants and applicants. Finally, we published our learnings in seven articles on the Department’s reference site so that states can draw on them when modernizing their UI applications.

Outcomes

We published seven articles on the Department’s reference site detailing our recommendations. Each article includes visual examples and links to additional resources.

Process

To understand the UI space, we began by reviewing state UI applications, Tiger Team recommendations, meeting notes from claimant advocates, and other UI resources. This helped us identify claimant pain points in the application process, which we used to define research questions such as “What common mistakes do claimants make on their UI applications?” We also assembled a set of early recommendations for improving initial UI applications and weekly certifications.

From there, we conducted one-hour focus groups with stakeholders at the Department, state agency staff, claimant advocates, and other actors in the UI space. During each session, we presented our recommendations and gathered feedback on how they would work in practice. We then iterated based on that feedback, presented our revised recommendations, and iterated again. This process enabled us to turn our recommendations into prototypes, which we tested with 18 applicants, claimants, and potential claimants. We compensated research participants for their time.

Our last step was to distill our learnings from usability testing into resources for the Department’s reference site. Before publishing these resources, we got feedback from our stakeholders to ensure the articles were accurate and useful.

Identifying and refining recommendations

The following example shows how we used user research to move from initial learnings to a single targeted recommendation.

In our early research, we discovered that many people struggle to correctly enter their wages when filing their weekly certification. Based on that learning, we developed research questions to ask during focus groups and usability testing. For example, we asked participants: “What questions do claimants need answered when considering reporting income?” Their answers led us to explore the idea of a wage calculator that could help claimants accurately enter their income.

During our focus groups, subject matter experts from the Department, state agencies, and other organizations in the UI space helped us learn more about wage reporting. For instance, we learned that some workers, such as truck drivers who are paid per mile, would struggle to use a wage calculator built around hours worked, because their income is not time-based.

We also conducted market research to understand other approaches to wage calculators, and we explored private-sector products where people report income, such as TurboTax.

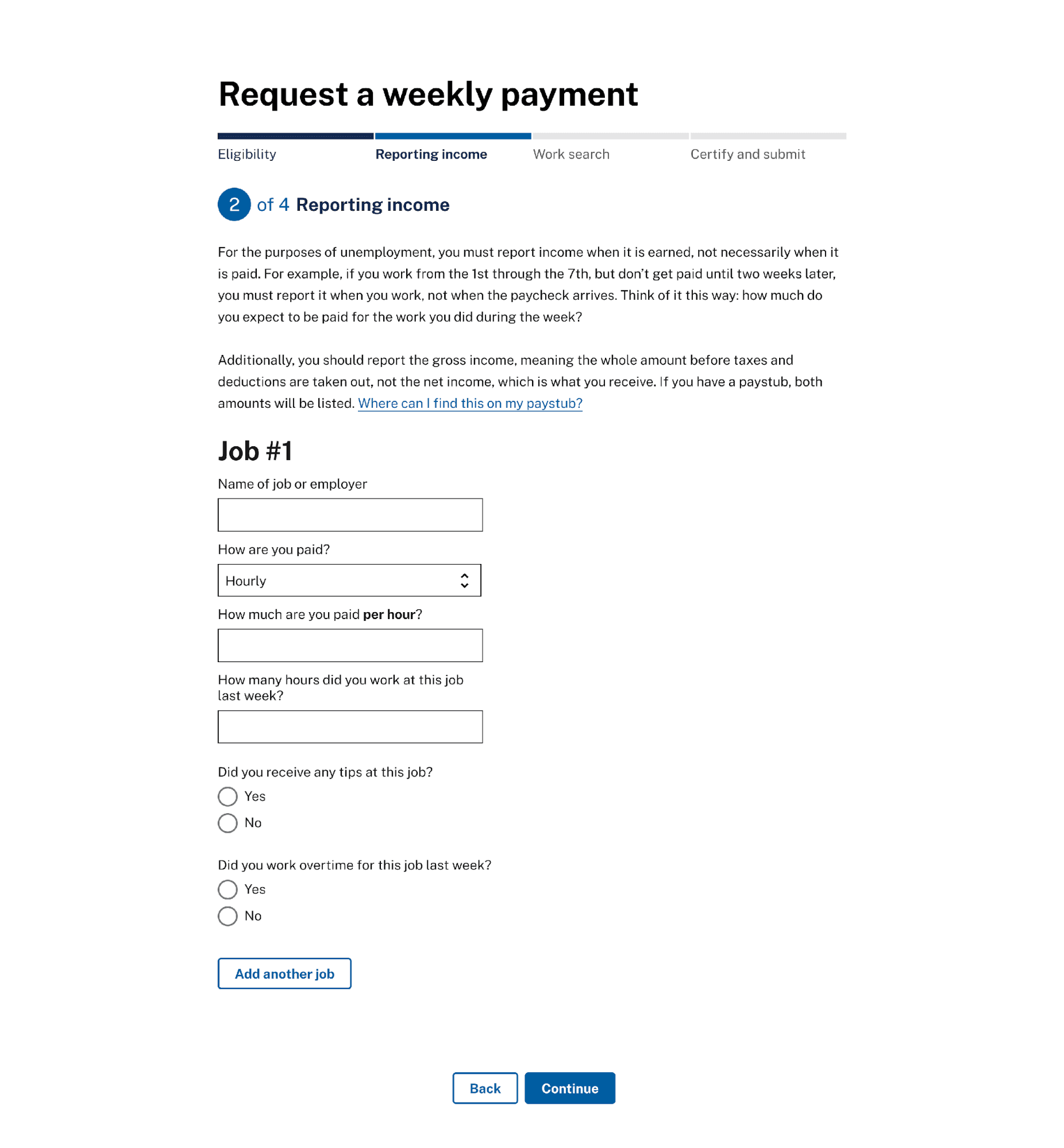

The prototype

Our learnings from focus groups, claimant research, and market research helped us refine our recommendations and build a prototype. The prototype includes an optional wage calculator that participants can open while filling out their weekly certification. If a participant clicks on the wage calculator, they see instructions on how to use it and what information to enter.

A screenshot of the wage calculator prototype.

We tested the prototype with claimants, asking questions such as:

- What questions do you have about what you should do next?

- How would you figure out how much income to report?

- In a real scenario, would you click on the “Help me calculate this” link or just enter an amount? Why?

- Can you think of any kind of work or pay that wouldn’t be covered by these kinds of questions?

Usability testing led to several important discoveries. Most notably, we found that most participants wanted to use the wage calculator, especially when their earnings were too complicated to calculate on their own. We also learned that any wage calculator should be optional, and that claimants should be able to correct the calculated amount after using it.

These learnings informed our final recommendation: provide an optional wage calculator that helps people accurately enter their income. Along with the calculator, we suggest that agencies give claimants contextual help and follow-up questions to help ensure they report their income accurately. You can read more about our recommendation in this article.

Conclusion

Through $1 billion in ARPA-funded investments, the Department and state UI agencies have worked together to help states begin modernizing their UI programs. Our team partnered with the Department on a qualitative research project that resulted in modernization recommendations for states. Through these efforts, we’ve supported the Department in fostering a stronger UI system that offers claimants better customer experiences.

Written by

Senior program manager

Senior editorial manager