Building easy-to-use and accessible government services for all requires that everyone—including the one in four Americans living with disabilities—can access a service’s information and functionality. Limited sight, a cognitive impairment, or the inability to grip a mouse should not interfere with someone’s ability to access digital services.

Digital accessibility has gained prominence in the last few years, yet product teams still typically run a general accessibility audit only after they have built a product. Often one person works through the application alone with an accessibility checklist, and the engineering team then receives a list of high-level accessibility errors to fix in a short amount of time. For a team that has spent months working toward launch, this list can feel like a headache. It can also delay a project if parts need to be rebuilt or redesigned to meet accessibility requirements. It may not be realistic to fix every issue before launch, and in the worst cases, a team releases a product that many people can’t actually use.

Nava’s experience creating an accessible online application for a state’s Medicaid for the aged, blind, and disabled (ABD) supplement showed us the value of an agile approach to accessibility testing: it creates an open environment where the team learns about issues early and learns how to fix them. When team members get the context for an accessibility issue while they are actively working on a feature, there is more room for open communication and problem solving than when everyone is stressed about getting the project out the door. Bringing accessibility into the build process creates more accountability across the entire team and more visibility into why these issues matter.

Our project and accessibility requirements

Nava created an online form for the Medicaid for the aged, blind, and disabled (ABD) supplement for a state that serves nearly 650,000 people. Given the nature of our project, our goal was to make the application accessible and available to everyone in the state on their own devices. As with any federally funded project, we had to meet Section 508 requirements, which require federal agencies’ electronic and information technology to be accessible to people with disabilities.

Section 508 requires conformance with the Web Content Accessibility Guidelines (WCAG) 2.0 at Level AA. WCAG 2.0 is organized around four principles:

Perceivable: Information and user interface components must be presentable to users in ways they can perceive.

Operable: User interface components and navigation must be operable.

Understandable: Information and the operation of the user interface must be understandable.

Robust: Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.

Each of these principles breaks down into testable success criteria, and each criterion is assigned a conformance level of A, AA, or AAA. Our minimum goal was to meet the requirements for Section 508 and WCAG 2.0 AA. These requirements do not cover every possible accessibility recommendation, but they set a baseline for our accessibility testing and gave us a clear starting point among all the possible improvements. Future iterations can build on what we accomplished in this phase.
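
As a rough illustration of how the principles and levels fit together: WCAG numbers each success criterion so that its first digit names its principle (1 Perceivable, 2 Operable, 3 Understandable, 4 Robust), and each criterion has a conformance level. The TypeScript sketch that follows encodes a few real WCAG 2.0 criteria and filters them to the A and AA levels that a Section 508 project has to meet. The type and function names are our own, and the list is a small sample, not the full set of criteria.

```typescript
// WCAG 2.0 principles; a criterion's first number identifies its principle.
type Principle = "Perceivable" | "Operable" | "Understandable" | "Robust";
type Level = "A" | "AA" | "AAA";

interface SuccessCriterion {
  id: string; // e.g. "1.4.3"
  name: string;
  level: Level;
}

const PRINCIPLE_BY_NUMBER: Record<string, Principle | undefined> = {
  "1": "Perceivable",
  "2": "Operable",
  "3": "Understandable",
  "4": "Robust",
};

function principleOf(sc: SuccessCriterion): Principle {
  const principle = PRINCIPLE_BY_NUMBER[sc.id.split(".")[0]];
  if (principle === undefined) {
    throw new Error(`Unrecognized success criterion id: ${sc.id}`);
  }
  return principle;
}

// Conformance at Level AA includes every Level A criterion; AAA is out of scope.
function inSection508Scope(sc: SuccessCriterion): boolean {
  return sc.level === "A" || sc.level === "AA";
}

// A small sample of real WCAG 2.0 criteria (not the complete list).
const SAMPLE_CRITERIA: SuccessCriterion[] = [
  { id: "1.1.1", name: "Non-text Content", level: "A" },
  { id: "1.4.3", name: "Contrast (Minimum)", level: "AA" },
  { id: "1.4.6", name: "Contrast (Enhanced)", level: "AAA" },
  { id: "2.1.1", name: "Keyboard", level: "A" },
  { id: "2.4.4", name: "Link Purpose (In Context)", level: "A" },
  { id: "3.3.2", name: "Labels or Instructions", level: "A" },
  { id: "4.1.2", name: "Name, Role, Value", level: "A" },
];

// Print each in-scope criterion with the principle it belongs to.
for (const sc of SAMPLE_CRITERIA.filter(inSection508Scope)) {
  console.log(`${principleOf(sc)}: ${sc.id} ${sc.name} (Level ${sc.level})`);
}
```

Keying the principle off the criterion number, rather than storing it separately, keeps the sample list short and mirrors how the guidelines themselves are numbered.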

Check for accessibility throughout the entire process

Accessibility is complex, and it touches every domain of building a web application. That means it should be tested and accounted for throughout the entire process, from the design stage through the build stage.

Testers, engineers, and designers need to work together to identify and develop appropriate solutions. This cross-communication is essential, but we also found that sharing what we learned with all stakeholders was critical. Through regular meetings and check-ins, we developed a shared understanding of common accessibility failures and of how to scan our code for potential accessibility bugs.

Whoever finds an accessibility issue, whether a designer, developer, or tester, should raise it in conversation, such as during the daily standup. This builds awareness and educates the team. Talking openly about an issue, especially while the feature is actively being worked on, gets the team on the same page about why it needs to be addressed and provides context for future work.

Use existing design systems

In the early stages of the design project, our team used the CMS design system to create mockups for the ABD application. CMS design system components are accessible out of the box, giving us a head start on many common design issues, such as form field labeling, error message placement, and proper modal dialog actions. Designs were checked for common accessibility problems such as color contrast and plain language.
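
Color contrast is one of the few checks that reduces to arithmetic. WCAG 2.0 defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors, and Success Criterion 1.4.3 (Level AA) requires at least 4.5:1 for normal text and 3:1 for large text. A minimal TypeScript sketch of that check, with helper names of our own choosing, might look like this:

```typescript
type RGB = [number, number, number]; // 0-255 per sRGB channel

// Relative luminance as defined in WCAG 2.0: linearize each channel, then weight.
function relativeLuminance([r, g, b]: RGB): number {
  const linear = (channel: number): number => {
    const s = channel / 255;
    return s <= 0.03928 ? s / 12.92 : Math.pow((s + 0.055) / 1.055, 2.4);
  };
  return 0.2126 * linear(r) + 0.7152 * linear(g) + 0.0722 * linear(b);
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color.
function contrastRatio(a: RGB, b: RGB): number {
  const la = relativeLuminance(a);
  const lb = relativeLuminance(b);
  const [lighter, darker] = la >= lb ? [la, lb] : [lb, la];
  return (lighter + 0.05) / (darker + 0.05);
}

// SC 1.4.3: 4.5:1 for normal text, 3:1 for large text.
function passesAA(foreground: RGB, background: RGB, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}

// Parse "#rrggbb" (case-insensitive, leading # optional) into channel values.
function hexToRgb(hex: string): RGB {
  const m = /^#?([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!m) throw new Error(`Expected #rrggbb, got ${hex}`);
  return [parseInt(m[1], 16), parseInt(m[2], 16), parseInt(m[3], 16)];
}

console.log(contrastRatio(hexToRgb("#767676"), hexToRgb("#ffffff")).toFixed(2));
console.log(passesAA(hexToRgb("#777777"), hexToRgb("#ffffff")));
```

With these formulas, gray `#767676` on white comes out at about 4.54:1 and passes AA for normal text, while the slightly lighter `#777777` comes out at about 4.48:1 and fails, which is why contrast is worth checking numerically rather than by eye.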

But building with a library designed for accessibility, such as the CMS design system, doesn't automatically guarantee that a custom app meets accessibility standards. For example, we found an issue with the way certain buttons are displayed in our form, which we flagged to the CMS design system as a suggested improvement.

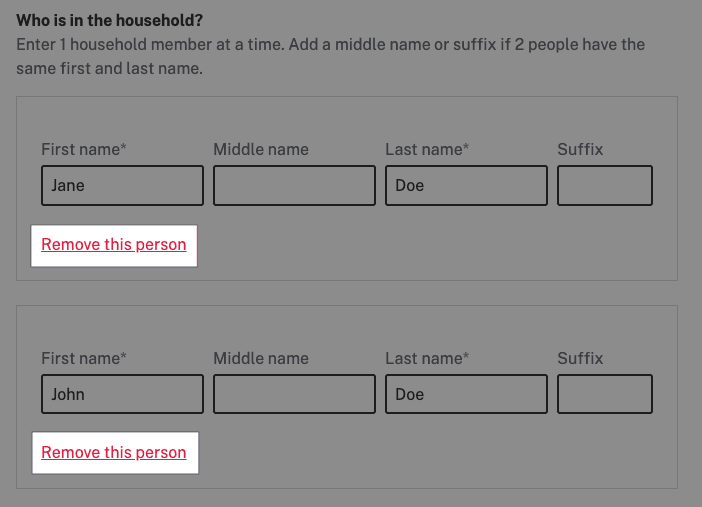

One of the first pages of the MABD form lets visitors add members of their household. Each new member gets a card with text inputs for first, middle, and last names, and each card has a button to remove that person from the list. The button reads: "Remove this person."

Because this remove button has the same text for every household member and doesn't say which member it applies to, our team's accessibility expert, Julia Maille, logged it as an accessibility finding, citing WCAG's Link Purpose rule (Success Criterion 2.4.4). Although many users can easily match each remove button to the corresponding member, visually impaired users who rely on screen readers may not have enough context when they focus on the button, and may be unsure which member they would delete by selecting it.

Without additional context, this remove button might confuse a visually impaired person using a screen reader.

The engineering and design teams worked together to pick up Maille's bug ticket and found a solution quickly. The engineers ultimately added a title attribute to the button's HTML that screen reading software recognizes. If the household member card is filled in with a name such as "Emiliano," the button text reads "Remove Emiliano." Otherwise, the text includes the card's number in place of the member's name. In creating this solution, we identified a design pattern we could reuse for similar links and buttons that need more detailed labels.
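
The labeling logic described above can be sketched in TypeScript. This is not the team's actual code: the `HouseholdMember` shape, the function names, and the exact fallback wording ("Remove person 2") are assumptions for illustration. Following the description, the sketch puts the same label in both the visible button text and the `title` attribute, and it escapes the name because the name comes from user input.

```typescript
// Hypothetical shape of one household-member card's data.
interface HouseholdMember {
  firstName?: string;
  lastName?: string;
}

// Escape user-entered text before placing it in HTML text or a quoted attribute.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Name the member if a name was entered; otherwise fall back to the card's
// 1-based position. The fallback wording is an assumption.
function removeButtonLabel(member: HouseholdMember, index: number): string {
  const name = [member.firstName, member.lastName]
    .map((part) => (part ?? "").trim())
    .filter((part) => part.length > 0)
    .join(" ");
  return name.length > 0 ? `Remove ${name}` : `Remove person ${index + 1}`;
}

// Render the button with the same label as its visible text and title attribute.
function renderRemoveButton(member: HouseholdMember, index: number): string {
  const label = escapeHtml(removeButtonLabel(member, index));
  return `<button type="button" title="${label}">${label}</button>`;
}

const household: HouseholdMember[] = [{ firstName: "Emiliano" }, {}];
household.forEach((member, i) => console.log(renderRemoveButton(member, i)));
```

Because every card computes its label from its own data, the pattern carries over to any repeatable component whose actions would otherwise share identical text.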

We also documented this fix for the repeatable card component in Nava’s UI Patterns library, which uses both CMS design system and U.S. Web Design System (USWDS) components and patterns. In future projects, the more descriptive button label will be included in this pattern automatically, saving the time needed to rediscover and reapply the fix.

Use multiple tools to ensure you're covering as many accessibility issues as possible

Building accessibility into a project from the start should be done using multiple tools and frameworks, both automated and manual.

During the build, our development team used the same accessible components from the CMS design system. The engineers also peer-tested each other's work, including checks that all functionality can be operated from the keyboard, which helps ensure the form can be used with a wide range of assistive devices.
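
Part of a keyboard check can be expressed as a rule over the rendered elements: anything that responds to a click must also be reachable with the Tab key and, if it is not a native control, must respond to Enter or Space itself. The sketch below applies that rule to a hypothetical, simplified element model (`ElementInfo` and its fields are our invention, and real browsers have more focus rules, such as skipping disabled controls). It complements, rather than replaces, actually tabbing through the form.

```typescript
// Hypothetical, simplified model of a rendered element.
interface ElementInfo {
  tag: string;            // lowercase tag name, e.g. "button" or "div"
  hasClickHandler: boolean;
  hasKeyHandler: boolean; // handles Enter/Space key presses itself
  tabIndex?: number;      // undefined when no tabindex attribute is set
  href?: string;          // only meaningful for <a>
}

const NATIVE_CONTROLS = new Set(["button", "input", "select", "textarea"]);

// Native controls (and links with an href) get focus and activation from the browser.
function isNativeControl(el: ElementInfo): boolean {
  return NATIVE_CONTROLS.has(el.tag) || (el.tag === "a" && el.href !== undefined);
}

// An explicit tabindex wins: 0 or more is in the Tab order, negative is not.
function inTabOrder(el: ElementInfo): boolean {
  if (el.tabIndex !== undefined) return el.tabIndex >= 0;
  return isNativeControl(el);
}

// List keyboard problems for one clickable element (empty if none).
function keyboardIssues(el: ElementInfo): string[] {
  if (!el.hasClickHandler) return [];
  const issues: string[] = [];
  if (!inTabOrder(el)) issues.push("clickable but not reachable with the Tab key");
  // A custom widget (e.g. a clickable <div>) must handle Enter/Space itself.
  if (!isNativeControl(el) && !el.hasKeyHandler) issues.push("no keyboard activation handler");
  return issues;
}

const elements: ElementInfo[] = [
  { tag: "button", hasClickHandler: true, hasKeyHandler: false },
  { tag: "div", hasClickHandler: true, hasKeyHandler: false },
  { tag: "div", hasClickHandler: true, hasKeyHandler: true, tabIndex: 0 },
];
elements.forEach((el, i) => console.log(i, el.tag, keyboardIssues(el)));
```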

Usability testing also led us to discover accessibility snags. If a user had difficulty understanding what to do next, or how to accomplish a given task, the website could be described as not operating in predictable ways. Many user experience issues can also be defined as accessibility issues.

For example, we put one of our user stories (a description of a software feature written from the user’s perspective) through a user acceptance testing (UAT) process. This process included checking the feature’s functionality in various browsers, checking the responsive mobile view, and running the affected pages through two accessibility plugins: the IBM Equal Access Accessibility Checker and the Web Accessibility Evaluation Tool (WAVE). These plugins pointed out common accessibility errors in the page code, showing where each error occurred and what needed to be corrected. We also used the macOS VoiceOver screen reader to experience the site the way a screen reader user would.
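
To give a feel for what plugins like these automate, here is a heavily simplified TypeScript sketch of two common rules: images with no `alt` attribute and form fields with no accessible label. The page model and rule wording are hypothetical and far smaller than what the IBM checker or WAVE actually inspect, and the success criteria cited are one reasonable mapping rather than either tool's. The example also shows a limit of automation: an image with `alt="image"` passes, even though that text tells a screen reader user nothing.

```typescript
// Hypothetical, simplified page model; real checkers inspect the live DOM.
interface ImageNode {
  kind: "img";
  id: string;
  alt?: string; // undefined means the alt attribute is missing entirely
}

interface InputNode {
  kind: "input";
  id: string;
  type: string;             // e.g. "text" or "hidden"
  hasLabelElement: boolean; // a <label for="..."> points at this field
  ariaLabel?: string;
}

type PageNode = ImageNode | InputNode;

interface Finding {
  nodeId: string;
  criterion: string;
  message: string;
}

function checkPage(nodes: PageNode[]): Finding[] {
  const findings: Finding[] = [];
  for (const node of nodes) {
    if (node.kind === "img") {
      // alt="" is valid for decorative images, so only a missing attribute is flagged.
      if (node.alt === undefined) {
        findings.push({ nodeId: node.id, criterion: "1.1.1 Non-text Content", message: "Image has no alt attribute" });
      }
    } else if (node.type !== "hidden") {
      const hasAriaLabel = (node.ariaLabel ?? "").trim().length > 0;
      if (!node.hasLabelElement && !hasAriaLabel) {
        findings.push({ nodeId: node.id, criterion: "4.1.2 Name, Role, Value", message: "Form field has no accessible label" });
      }
    }
  }
  return findings;
}

const samplePage: PageNode[] = [
  { kind: "img", id: "logo", alt: "image" }, // passes, though "image" is not a useful description
  { kind: "img", id: "chart" }, // flagged: alt attribute missing
  { kind: "input", id: "first-name", type: "text", hasLabelElement: true },
  { kind: "input", id: "middle-name", type: "text", hasLabelElement: false }, // flagged
  { kind: "input", id: "csrf", type: "hidden", hasLabelElement: false }, // hidden fields are skipped
];

for (const f of checkPage(samplePage)) {
  console.log(`${f.nodeId}: WCAG ${f.criterion}: ${f.message}`);
}
```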

These plugins helped us find many issues, but automated tools like these generally catch only about 25 to 30 percent of accessibility issues. They are a step in the right direction, but a hybrid of automated and manual testing is the most thorough approach.

We also had success incorporating manual accessibility testing into our process. Traditional accessibility audits are time consuming, and their findings are difficult to remediate when teams leave them until the end of a project. Instead, having a dedicated tester review applicable user stories gave us a faster feedback loop for improving the ABD form.

Maille also applied the Department of Homeland Security's Trusted Tester framework to her testing process. This framework ensures that testing covers every WCAG Level A and AA success criterion required to meet Section 508.

Manual accessibility testing can be daunting because of the sheer number of issue types that can arise. Maille noted that the Trusted Tester framework helped her focus on developing plans to test the form's various features. "After you learn [Trusted Tester], it was pretty easy to keep repeating and keep redoing all those tests," she said.

As she narrowed down the applicable tests for each new user story or feature, Maille shared the results in a standard reporting template, the Voluntary Product Accessibility Template (VPAT), which references the specific rule behind each violation for additional context. She would then try to identify possible solutions, whether by highlighting specific lines of HTML or by providing screenshots of the issue. She stressed the importance of being as detailed as possible when translating this guidance from complex jargon into something engineers can understand. Her goal was to make the bug reports as actionable as possible for the engineering team.
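
One way to keep findings this actionable is to record each one in a consistent structure that names the success criterion, the location, what was observed, what was expected, and a suggested fix. The TypeScript sketch below is a hypothetical format, not the VPAT itself or Maille's actual template; its field names are our own, and the example reuses the remove-button finding described earlier.

```typescript
// Hypothetical record for one finding; field names are illustrative only.
interface AccessibilityFinding {
  story: string;         // user story or feature under test
  criterion: string;     // WCAG success criterion number and name
  location: string;      // page and element where the issue occurs
  observed: string;      // what the tester saw or heard
  expected: string;      // what conformance requires
  suggestedFix?: string; // optional pointer toward a solution
  screenshot?: string;   // optional path or link to a screenshot
}

// Format a finding as plain text for a bug ticket, skipping empty optional fields.
function formatFinding(f: AccessibilityFinding): string {
  const lines = [
    `WCAG ${f.criterion}`,
    `Story: ${f.story}`,
    `Where: ${f.location}`,
    `Observed: ${f.observed}`,
    `Expected: ${f.expected}`,
  ];
  if (f.suggestedFix) lines.push(`Suggested fix: ${f.suggestedFix}`);
  if (f.screenshot) lines.push(`Screenshot: ${f.screenshot}`);
  return lines.join("\n");
}

const removeButtonFinding: AccessibilityFinding = {
  story: "Add household members",
  criterion: "2.4.4 Link Purpose (In Context)",
  location: "Household page, remove button on each member card",
  observed: "Every card's button is announced as \"Remove this person\".",
  expected: "Each remove button identifies the member it removes.",
  suggestedFix: "Include the member's name in the label, e.g. \"Remove Emiliano\".",
};

console.log(formatFinding(removeButtonFinding));
```

Leading with the criterion keeps the rule citation front and center, while the observed/expected pair gives engineers the plain-language version of the jargon.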

Because our agile approach involved ongoing testing, the engineering team was able to act on more of her findings. “I was able to catch a lot more issues before the project got all the way to the end, when I wouldn’t have had much time to test,” she said. “And it was more thorough doing it this way.”

Conclusion

The more our engineering team learned, the more improvements to the CMS Design System we were able to identify, and we have since reported them. We've offered suggestions based on our new understanding of the WCAG guidelines, and in one case we opened a pull request.

Not every team has the capacity for the level of accessibility checking we did on this project, but we believe that including some level of testing and review throughout a project, instead of waiting until the end, will always lead to better accessibility in the final product. That includes testing your own work as you go! You don’t need an official testing process to start looking out for these issues yourself. Something as simple as adding an accessibility plugin to your workflow can help you catch a lot of issues before your work moves to the next phase.

Communicate any accessibility issues you find to the whole team to build awareness and shared knowledge. Talking openly about issues, especially while a feature is actively being worked on, gets everyone on the same page about why an issue needs to be addressed and provides context for future work.

Clearly define the scope of the requirements you need to meet. If you need to pass Section 508, you need to satisfy the WCAG 2.0 AA requirements. It’s fantastic if you can go beyond them, for example with WCAG 2.1, which adds success criteria for mobile accessibility among other areas, but a clearly defined scope gives you a starting point for your MVP and can make the world of accessibility seem less overwhelming.

Keep yourself open to hearing about new issues and techniques, and know that no one has all the answers. With changing technology and requirements, all we can do is strive to get better. As Maille says, “It's always a learning process. I'm always learning new things, and I'm having to research and look things up.”

Written by

Designer/Researcher

Software Engineer